This page highlights how I approach regression, release validation, and automation while supporting cross-platform products. My focus is always on reducing risk before deployment and improving long-term stability.

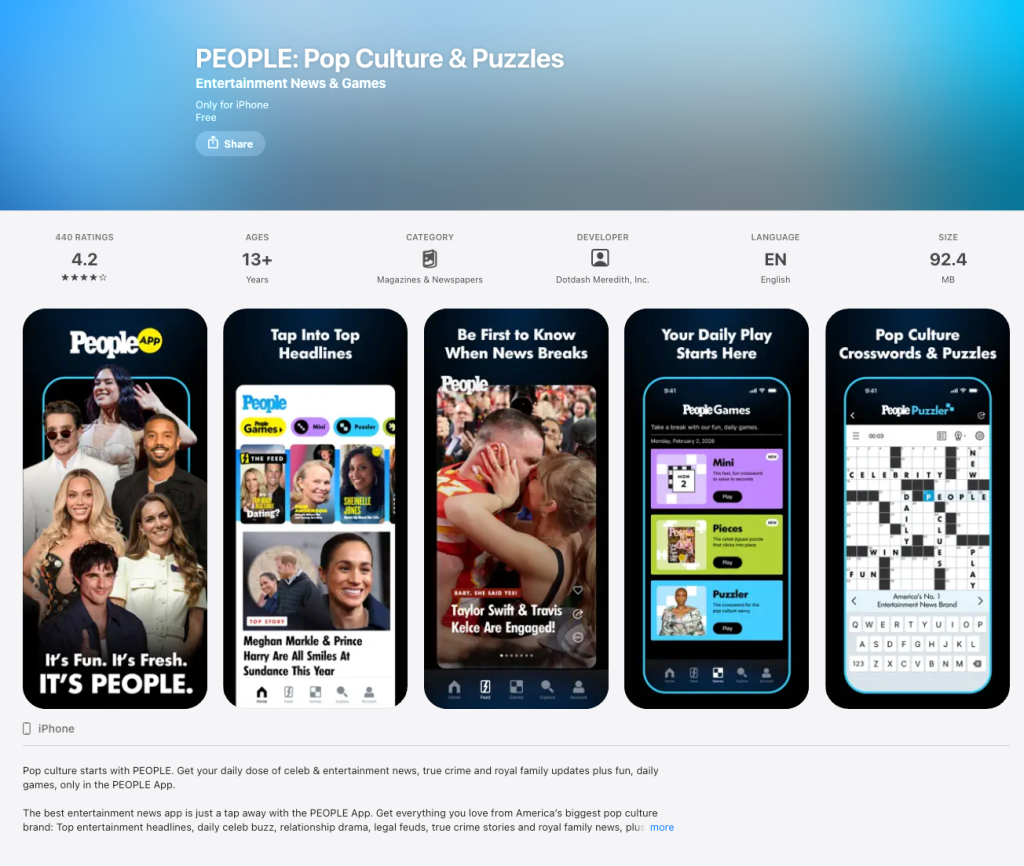

Foundational QA for the People App

Establishing Structured Regression

I was part of the early QA efforts supporting the People App, helping establish structured regression practices across iOS, Android, Web, and CMS integrations.

Being involved early required more than validating individual features. I focused on building consistency in how regression was executed before each release cycle. Coverage included authentication flows, content rendering, discovery features, account functionality, and cross-platform consistency.

Clear QA summaries, structured documentation, and environment tracking helped reduce ambiguity before sign-off and supported predictable release cycles.

Feature-Level Regression Matrix

Structured Coverage Across Platforms

To ensure repeatable and reliable coverage, I maintain a feature-level regression matrix used during release candidate testing for iOS and Android.

This matrix tracks:

- Core feature areas and user flows

- Test scenarios and expected results

- Device and OS coverage

- Build versions under validation

- Testing status and notes

- Linked Jira tickets when issues are identified

Rather than testing reactively, this structured approach ensures coverage remains consistent across releases and reduces blind spots when features evolve.

The matrix is updated throughout testing and reused for future regression cycles, allowing quality efforts to scale as the product grows.
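
As an illustration only, the matrix columns listed above could be modeled as a small TypeScript type with a few helpers. This is a sketch, not the actual tooling: every field name, function name, and sample value here (including the `APP-101` ticket key) is hypothetical.

```typescript
// Hypothetical shape for one row of a feature-level regression matrix.
// Field names are illustrative and mirror the columns listed above.
type TestStatus = "not_run" | "pass" | "fail" | "blocked";

interface MatrixRow {
  featureArea: string;          // core feature area or user flow
  scenario: string;             // what is being tested
  expectedResult: string;
  platform: "iOS" | "Android";
  device: string;
  osVersion: string;
  buildVersion: string;         // build under validation
  testStatus: TestStatus;
  notes: string;
  jiraKeys: string[];           // linked Jira tickets, if any
}

// Count rows per status so a release summary can be written quickly.
function summarize(rows: MatrixRow[]): Record<TestStatus, number> {
  const counts: Record<TestStatus, number> = { not_run: 0, pass: 0, fail: 0, blocked: 0 };
  for (const row of rows) {
    counts[row.testStatus] += 1;
  }
  return counts;
}

// Failed rows with no linked ticket are worth flagging before sign-off.
function failuresMissingTickets(rows: MatrixRow[]): MatrixRow[] {
  return rows.filter((r) => r.testStatus === "fail" && r.jiraKeys.length === 0);
}

// Reuse the matrix for the next cycle: keep the scenarios, reset the results.
function resetForBuild(rows: MatrixRow[], buildVersion: string): MatrixRow[] {
  return rows.map((r): MatrixRow => ({
    ...r,
    buildVersion,
    testStatus: "not_run",
    notes: "",
    jiraKeys: [],
  }));
}

// Made-up sample data for demonstration.
const sampleRows: MatrixRow[] = [
  {
    featureArea: "Authentication", scenario: "Login with valid credentials",
    expectedResult: "User lands on home screen", platform: "iOS",
    device: "iPhone 14", osVersion: "17.4", buildVersion: "1.0.0",
    testStatus: "pass", notes: "", jiraKeys: [],
  },
  {
    featureArea: "Discovery", scenario: "Search returns results",
    expectedResult: "Matching articles are listed", platform: "Android",
    device: "Pixel 7", osVersion: "14", buildVersion: "1.0.0",
    testStatus: "fail", notes: "Empty list on slow network", jiraKeys: ["APP-101"],
  },
  {
    featureArea: "Account", scenario: "Update display name",
    expectedResult: "New name shown after save", platform: "Android",
    device: "Pixel 7", osVersion: "14", buildVersion: "1.0.0",
    testStatus: "fail", notes: "Save button unresponsive", jiraKeys: [],
  },
];

console.log(summarize(sampleRows));
console.log(failuresMissingTickets(sampleRows).map((r) => r.scenario));
```

Keeping results separate from scenarios, as `resetForBuild` does, is one way the same matrix can carry over from one release candidate to the next.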

Regression as Risk Management

Validating What Matters Most

Regression is not simply retesting old scenarios. I treat it as structured risk validation.

For each release, I review scope and dependencies to identify high-impact areas most likely to be affected. I prioritize cross-platform consistency, backend integration validation, session handling, and environment-specific behavior.
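
One way to make that review concrete is a simple scoring pass over feature areas. The sketch below is an assumption, not a description of an established process: the `FeatureArea` shape, the 1 to 3 impact scale, the scoring rule (changed dependencies multiplied by impact), and the component names are all hypothetical.

```typescript
// Hypothetical model: each feature area lists the components it depends on
// and carries a rough user-impact weight (3 = highest).
interface FeatureArea {
  area: string;
  dependsOn: string[];
  impact: 1 | 2 | 3;
}

interface RiskScore {
  area: string;
  score: number;
}

// Score = (number of changed components the area depends on) x impact.
// Areas untouched by the release scope are dropped; the rest are sorted
// from highest to lowest score.
function rankByRisk(areas: FeatureArea[], changedComponents: string[]): RiskScore[] {
  return areas
    .map((a): RiskScore => ({
      area: a.area,
      score: a.dependsOn.filter((c) => changedComponents.indexOf(c) !== -1).length * a.impact,
    }))
    .filter((r) => r.score > 0)
    .sort((x, y) => y.score - x.score);
}

// Made-up example: a release that touches authentication and session storage.
const featureAreas: FeatureArea[] = [
  { area: "Login and session handling", dependsOn: ["auth-api", "session-store"], impact: 3 },
  { area: "Content feed", dependsOn: ["cms-api", "image-cdn"], impact: 2 },
  { area: "Account settings", dependsOn: ["auth-api", "profile-api"], impact: 2 },
];

const ranked = rankByRisk(featureAreas, ["auth-api", "session-store"]);
for (const entry of ranked) {
  console.log(`${entry.area}: ${entry.score}`);
}
```

A score like this only orders the work. Which scenarios to run within each area still depends on judgment and on the edge cases noted in earlier cycles.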

Maintaining detailed test notes and tracking edge cases helps streamline future regression cycles and improves issue triage when bugs arise.

This approach supports stability even as features evolve.

Learning & Contributing to Automation

Growing with Playwright

To complement manual regression efforts, I have been actively learning and contributing to automation using Playwright.

My focus has been on understanding how automated tests validate high-impact user journeys such as login and core navigation flows. I contribute by reviewing existing test coverage, assisting in expanding targeted areas, and running regression checks during pull requests.

Automation strengthens release confidence by catching repetitive regressions earlier in the development cycle. I continue building my skills in writing reliable tests and learning sustainable automation practices.

Collaboration & Communication

Reducing Uncertainty During Releases

Quality is collaborative.

I work closely with engineers, product managers, and content teams to clarify acceptance criteria and identify potential risks early. During release cycles, I provide structured updates outlining testing scope, findings, and readiness status.

When issues are identified, I document clear reproduction steps, environment details, and device information to support efficient resolution.
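
A consistent report structure makes those details harder to forget. The following is a minimal sketch of such a template, assuming a plain-text format; the `BugReport` fields, the `formatBugReport` helper, and the sample values are hypothetical and not tied to any specific Jira configuration.

```typescript
// Hypothetical bug report structure covering reproduction steps,
// environment details, and device information.
interface BugReport {
  summary: string;
  environment: string;   // e.g. "staging" or "production"
  build: string;
  device: string;
  os: string;
  steps: string[];       // reproduction steps, in order
  expected: string;
  actual: string;
}

// Render the report as plain text with numbered reproduction steps.
function formatBugReport(report: BugReport): string {
  const numberedSteps = report.steps.map((step, i) => `${i + 1}. ${step}`);
  return [
    `Summary: ${report.summary}`,
    `Environment: ${report.environment} (build ${report.build})`,
    `Device: ${report.device}, ${report.os}`,
    "Steps to reproduce:",
    ...numberedSteps,
    `Expected: ${report.expected}`,
    `Actual: ${report.actual}`,
  ].join("\n");
}

// Made-up example report.
const sampleReport: BugReport = {
  summary: "Session expires immediately after login",
  environment: "staging",
  build: "1.0.0",
  device: "Pixel 7",
  os: "Android 14",
  steps: ["Open the app", "Log in with a valid account", "Background the app for 10 seconds", "Return to the app"],
  expected: "User remains logged in",
  actual: "User is returned to the login screen",
};

console.log(formatBugReport(sampleReport));
```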

My goal is to create transparency and confidence so teams can ship predictably.

Growth Through Diverse Projects

Working Across Teams and Global Brands

Throughout my career, I have had the opportunity to work remotely with diverse teams across different countries and cultures.

Supporting large-scale digital platforms and high-visibility brands, including projects connected to CNN Travel and MGM Macau, has broadened my understanding of how digital systems operate at scale.

Collaborating across nationalities and disciplines has strengthened my communication skills and reinforced the importance of structured validation in high-impact environments.

I am genuinely grateful for the opportunity to contribute to complex projects while working remotely. These experiences continue to shape how I approach quality: with responsibility, clarity, and continuous learning.